Published: Friday, October 3 2025

Last updated: Tuesday, October 14 2025

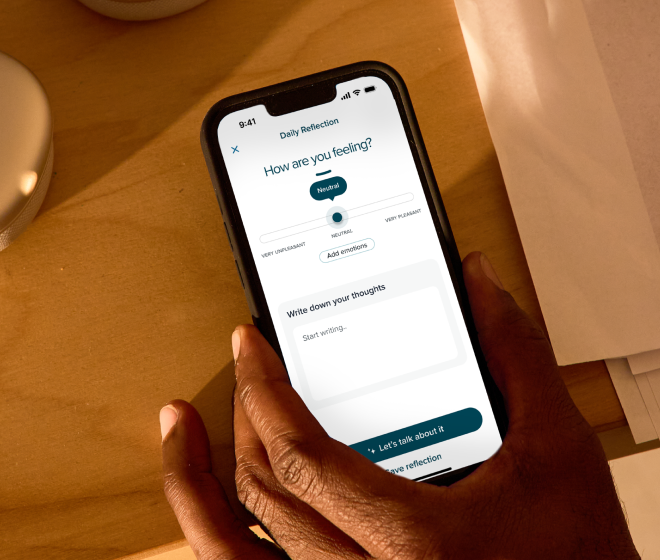

Is Artificial Intelligence for Mental Health Safe?

Written by: Harris Strokoff, MD

Is AI in mental health care safe? The simple answer is: it depends.

The conversation about AI in our field often feels binary: either we embrace the technology and fundamentally change the nature of care, or we cling to our current workflows and fail to meet the overwhelming demand.

As a clinician, I have experienced the supportive role AI can play. Throughout my career, I've seen up close how easily clinicians burn out, which can lead to access and quality issues for patients in need of treatment. That’s where AI scribe technology comes in. By automating the administrative burdens of note-taking and paperwork, AI scribes can give providers back the energy to focus on the core of therapy itself: the human connection.

Not all mental health AI is created equal. At SonderMind, tools are built with licensed clinicians, which grounds them in evidence-informed, therapist-approved treatment plans. These tools are here to support the most essential relationship in care: the one between a client and their provider.

Our responsibility: Ethical integration and control

From a medical and ethical standpoint, the provider’s primary responsibility when integrating any technology is to ensure it is safe, secure, and transparent. Providers must vet every tool to confirm it's HIPAA-compliant and as secure as the top electronic medical record (EMR) systems in our field.

SonderMind supports this by designing systems to meet the highest standards for healthcare privacy. SonderMind is committed to "privacy by design," meaning data is handled with the utmost care, from dual encryption to de-identification before it’s even used for model training.

The clinician’s role requires maintaining full control over any AI-generated content. This is non-negotiable. That’s why a critical feature for any clinical AI tool must be a mandatory provider sign-off on everything it generates. The provider must remain the ultimate authority on what is used in a clinical context.

SonderMind’s AI scribe features, such as drafting notes and treatment plans, are designed as editable drafts. This empowers the provider to review, modify, and ultimately approve the content. The AI does some of the heavy lifting, but the provider retains all the control.
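For readers who think in code, the sign-off requirement described above can be pictured as a hard gate in software. The Python sketch below is purely illustrative and is not SonderMind's implementation: the `DraftNote` class, its method names, and the `prov-1` identifier are all hypothetical. It assumes only what this section states, namely that AI output is an editable draft and that nothing becomes clinical content until a provider approves it.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DraftNote:
    """Hypothetical AI-generated draft that cannot be used without sign-off."""
    text: str
    approved_by: Optional[str] = None
    audit_log: list = field(default_factory=list)

    def _log(self, actor: str, action: str) -> None:
        # Record who did what, and when, so the review history is traceable.
        self.audit_log.append((datetime.now(timezone.utc), actor, action))

    def edit(self, provider_id: str, new_text: str) -> None:
        # Any edit clears a prior approval; the provider must sign off again.
        self.text = new_text
        self.approved_by = None
        self._log(provider_id, "edited")

    def sign_off(self, provider_id: str) -> None:
        # The provider explicitly approves the current text.
        self.approved_by = provider_id
        self._log(provider_id, "approved")

    def finalize(self) -> str:
        # Hard gate: unapproved AI content never reaches the clinical record.
        if self.approved_by is None:
            raise PermissionError("provider sign-off required")
        return self.text


# Usage: the draft is reviewed, modified, and approved before it is used.
note = DraftNote(text="AI-drafted session summary")
note.edit("prov-1", "Summary reviewed and corrected by the provider")
note.sign_off("prov-1")
final_text = note.finalize()
```

The design choice worth noticing is that approval is tied to the exact text the provider saw: in this sketch, any later edit clears the sign-off, so the draft must be approved again before it can be used.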

Accountability for errors

Given that any technology can make an error, establishing clear accountability is essential. When a patient is harmed, the ultimate responsibility rests with the clinician—who is simply assisted by the mental health AI. This is precisely why that clinician's sign-off is so vital. SonderMind’s oversight model addresses this by ensuring provider accountability is integrated into its process.

SonderMind’s AI Governance Committee, made up of clinical, compliance, and technical experts, reviews and approves every AI feature before it launches. The committee also conducts annual audits to detect and correct any bias or harmful outcomes. By logging usage and oversight, SonderMind maintains a clear audit trail that reinforces transparency in the use of AI.

Addressing the provider shortage

From my clinical perspective, AI can safely and ethically address the growing provider shortage without compromising the quality of care. By handling administrative burdens like note-taking and drafting therapist-approved treatment plans, AI scribe tools directly combat clinician burnout—a major factor contributing to the shortage. This is how we can ethically tackle the access crisis: by making human-delivered care more efficient without ever compromising its quality.

So, is artificial intelligence for mental health safe?

The answer is yes, when it is provider-controlled with provider sign-off.

Safety lies not in the technology itself but in whether the system it operates within prioritizes the therapeutic alliance and human oversight above everything else, and in whether the provider uses it responsibly. SonderMind’s AI tools are built with licensed therapists, are subject to rigorous clinical review, and require provider sign-off. This allows them to become powerful assistants that reduce provider burden and enhance the care providers give.

AI in mental health care should be clinician-led, not AI-led. The tools must help humans spend more time with other humans and with the natural world, not increase the time we spend interacting with technology at the expense of real-world connection. We have strong evidence that the former improves mental health outcomes, whereas the latter may be making us a sicker society.

SonderMind's commitment to a clinician-guided development process, together with its emphasis on individual provider approval of how AI tools are used, sets the standard for AI tool development. This approach maximizes safety while still allowing quality of care to improve.
